AI adoption is not just a technology project — it’s a business transformation. Success requires aligning business value with data readiness, educating executives, auditing processes, investing in scalable AI platforms, leveraging open source, and ensuring continuous monitoring and cultural alignment. This roadmap provides a phased approach to help enterprises adopt AI responsibly and at scale.

AI transformation requires more than tools — it needs strategy, leadership, and cultural change. This roadmap breaks down the journey to becoming an AI-first enterprise in six clear stages.

You know you need to do something about AI, but where do you start? The pressure is real: competitors are moving fast, employees are experimenting on their own, and vendors are promising the moon.

If you wait too long, you risk falling behind in ways that will be tough to recover from. The mistake we often see organizations make is thinking of AI as yet another technology adoption cycle. You just find and buy the right software, roll it out, and check the box, right?

No. AI doesn't work that way. It is not only about tools or platforms. It is about how you run your business, how you use your data, and how your people adapt.

And I get it: this can be overwhelming. Fortunately, once you break it down, it's straightforward to tackle. There's no one-size-fits-all prescription, but there is a clear, phased framework you can adapt to your own company as you work out how to build an AI transformation process.

The process has six key facets. Because both the technology and your organization will change over time as your business and your customers learn, these facets aren't a one-time checklist. They set you and your organization on a path where AI drives the business.

- Business Value Anchoring and Data Readiness

- Executive AI Fluency Assessment

- Comprehensive Process Audit and Opportunity Mapping

- AI Agents Platform Requirements

- Open Source Strategy and Implementation

- Quality, Monitoring, Continuous Improvement, and Scaling

Stage 0: Anchoring Business Value with Data Readiness

Before you survey executives or audit processes, make sure you have a solid foundation. Everything you do should connect to what your customers value and rest on the appropriate data, all within the framework of top- and bottom-line finances.

Business Value

Every initiative should connect to measurable outcomes: increasing revenue, reducing costs, improving customer experience, or lowering risk, to name the most prominent. If you can't tie a use case to a value stream, it will die in committee or crumble after the first prototype, and that's if you're lucky.

Data

Alongside value, you need to look at your data, the fuel that makes AI possible. Chances are you've got no shortage of data, but most companies have quality, accessibility, or compliance issues that will block progress if you don't address them.

Start with a simple inventory. Ask yourself:

- What data do you have?

- Where is it stored?

- Who owns it?

- What regulations apply to it?

Do not try to boil the ocean, but make sure you aren't building on sand.
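The inventory doesn't need special tooling to get started. As a minimal sketch, assuming you record one entry per dataset, the four questions above map onto a simple Python record. The `DataAsset` class, the `review_flags` helper, and the example entries are all hypothetical, shown only to illustrate the shape of the exercise.

```python
from dataclasses import dataclass, field


@dataclass
class DataAsset:
    """One row in a lightweight data inventory (field names are illustrative)."""
    name: str  # What data do you have?
    location: str  # Where is it stored?
    owner: str  # Who owns it?
    regulations: list[str] = field(default_factory=list)  # What regulations apply to it?


def review_flags(inventory: list[DataAsset]) -> dict[str, list[str]]:
    """List assets missing an owner or a location, and assets that need a compliance review."""
    return {
        "no_owner": [a.name for a in inventory if not a.owner.strip()],
        "no_location": [a.name for a in inventory if not a.location.strip()],
        "regulated": [a.name for a in inventory if a.regulations],
    }


# Example entries are made up for illustration.
inventory = [
    DataAsset("customer_orders", "sales warehouse", "Sales Ops", ["GDPR"]),
    DataAsset("support_tickets", "helpdesk SaaS", ""),
    DataAsset("sensor_logs", "", "Manufacturing"),
]
print(review_flags(inventory))
# {'no_owner': ['support_tickets'], 'no_location': ['sensor_logs'], 'regulated': ['customer_orders']}
```

A spreadsheet with the same four columns works just as well; what matters is that gaps in ownership and location show up before they block a project.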

Finances

Before you begin, you need a basic financial plan. What are you willing to spend on pilots versus enterprise rollouts? Be clear about CapEx versus OpEx and how you will justify spend to the CFO. Remember that AI budgets will look different from traditional IT budgets because the work is more iterative.

Finally, accept that technology will change fast. Build in sustainability by planning for upgrades and replacements and by avoiding technical debt.

Stage 1: Executive AI Fluency Assessment

No transformation can succeed if leadership doesn't understand what they're signing up for. You don't need every executive to become a data scientist, but they have to understand what AI can and (maybe even more importantly) can't do, what risks it carries, and how it connects to their business area.

Executive Interviews

Run a survey or interview process to get a baseline. Ask simple but telling questions:

- How do you see AI impacting your department in the next three years?

- What do you believe AI's limits are? What can't it do?

- What capabilities are you most excited about?

- What risks are you most worried about?

You will quickly see where the gaps and misconceptions are.

Creating a Plan

Once you know where you stand, you can create a plan to educate and align your leadership team. Some things to think about include:

- Identify champions who will push adoption and skeptics who may slow it down.

- Build a shared vocabulary so people are not talking past each other.

- Make governance part of this stage too. Executives need to understand compliance and ethics issues early.

- Think about structure. Many companies set up an AI Center of Excellence or a cross-functional steering group to drive progress without duplicating effort.

Stage 2: Comprehensive Process Audit and Opportunity Mapping

Once leadership is aligned, you can look at your business processes. The goal is to find where AI and automation can add value, and how that value compares to the effort and risk required to deliver it.

Process Audit

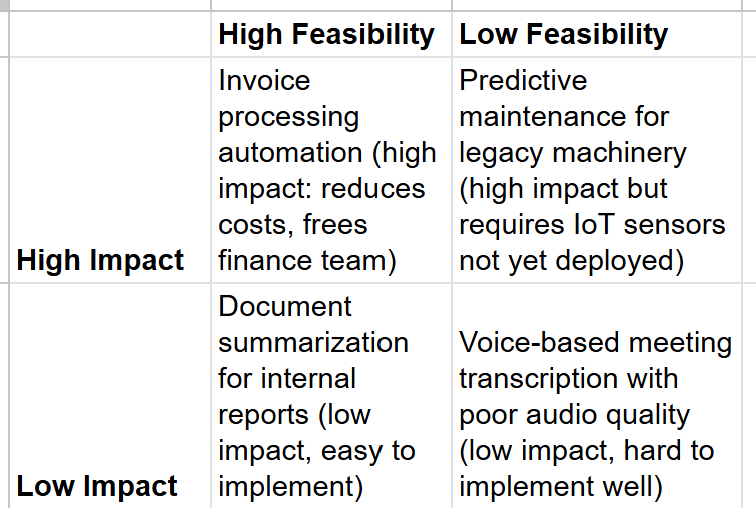

Map your processes systematically. For each one, assess both technical feasibility and business impact. This gives you a two-by-two grid that helps you focus on high-impact, high-feasibility areas first.

So in this example, you'd start with invoice processing automation, then reassess whether predictive maintenance has become more feasible.
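To make the grid concrete, here is a minimal sketch that places a process in a quadrant from two scores. The 1-5 scale, the cutoff of 3, the quadrant labels, and the scores themselves are assumptions for illustration; only the two process names come from the example.

```python
def quadrant(feasibility: int, impact: int, cutoff: int = 3) -> str:
    """Place a process on the feasibility/impact grid (scores 1-5; the cutoff is an assumption)."""
    feasible = feasibility >= cutoff
    valuable = impact >= cutoff
    if feasible and valuable:
        return "start here"
    if valuable:
        return "reassess feasibility later"
    if feasible:
        return "optional: easy but low impact"
    return "deprioritize"


# Hypothetical (feasibility, impact) scores for the two processes named in the example.
processes = {
    "invoice processing automation": (5, 4),
    "predictive maintenance": (2, 5),
}
for name, (feasibility, impact) in processes.items():
    print(f"{name}: {quadrant(feasibility, impact)}")
# invoice processing automation: start here
# predictive maintenance: reassess feasibility later
```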

ROI and Level of Effort

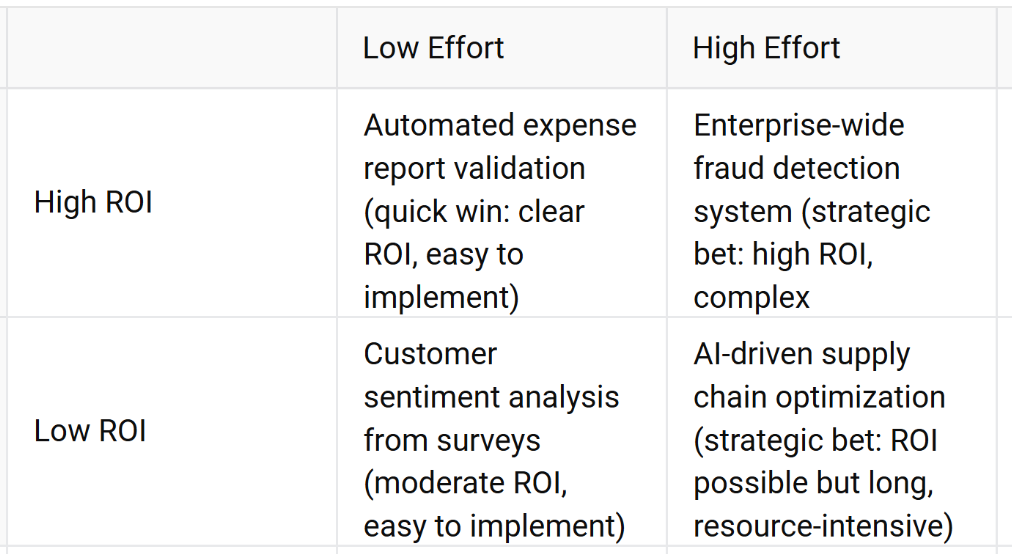

Evaluating ROI and level of effort is a similar process. Some opportunities will be quick wins. Others will be long-term strategic bets. Keep both in view, but start by filling a pipeline of initiatives ranked by value and effort. Do not ignore risks; security, compliance, and operational disruption need to be part of the calculation.

In this example, you start with quick wins such as automated expense report validation. Then, as you gain experience (and momentum), tackle more strategic efforts such as enterprise-wide fraud detection.
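A ranked pipeline can start as little more than a sorted list. The sketch below assumes 1-5 scores for value, effort, and risk, plus a made-up scoring rule that treats effort and risk as costs. The weights, the quick-win and strategic-bet cutoffs, and the third initiative are hypothetical, so calibrate them against your own ROI models.

```python
from typing import NamedTuple


class Initiative(NamedTuple):
    name: str
    value: int  # expected business value, 1-5
    effort: int  # level of effort, 1-5
    risk: int  # security, compliance, and disruption risk, 1-5


def score(i: Initiative) -> float:
    # Assumed weighting: each point of effort or risk subtracts half a point of value.
    return i.value - (i.effort + i.risk) / 2


def category(i: Initiative) -> str:
    # Assumed rule: valuable and cheap is a quick win; valuable but expensive is a strategic bet.
    if i.value >= 3 and i.effort <= 2:
        return "quick win"
    if i.value >= 3:
        return "strategic bet"
    return "backlog"


pipeline = [
    Initiative("enterprise-wide fraud detection", value=5, effort=5, risk=4),
    Initiative("meeting-notes summarization", value=2, effort=2, risk=2),  # hypothetical
    Initiative("automated expense report validation", value=3, effort=1, risk=1),
]
for i in sorted(pipeline, key=score, reverse=True):
    print(f"{i.name}: {category(i)} (score {score(i):.1f})")
# automated expense report validation: quick win (score 2.0)
# enterprise-wide fraud detection: strategic bet (score 0.5)
# meeting-notes summarization: backlog (score 0.0)
```

Because risk is part of the score, a high-value initiative with heavy compliance exposure sinks in the ranking rather than disappearing from view.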

Run Pilots, but Think Ahead

Before rolling anything out broadly, run pilots. Pick a small, low-risk process where you can test assumptions and show early results. These initial pilots are about completing projects, sure, but they also lay the groundwork you need before you scale. Use them to refine your ROI models and build internal champions.

Essentially, you want to treat them like sandboxes where you can fail safely and learn. At the same time, sketch a scaling roadmap. Which departments will you move into after these pilots, and in what order?

Thinking ahead prevents isolated wins from staying isolated.

Stage 3: AI Agents Platform Requirements

If you want AI to scale, you need infrastructure to manage it. An AI agents platform is not just another tool. Over time, your AI agents platform will become as important as your ERP. (Maybe even more.) It is your control board for orchestrating, monitoring, and scaling AI systems.

At a minimum, the platform should support multi-agent coordination, workflow management, and integration with existing systems. It must handle monitoring, security, and compliance at an enterprise level. Think about audit trails, role-based access, and scalability. These are not nice-to-haves. They are table stakes.
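Role-based access and audit trails are easier to evaluate when you know roughly what they should look like. The following is a minimal sketch, not any vendor's API: it assumes each agent acts under a role, and the roles, permissions, and the `authorize` function are hypothetical. The key design choice is that denied attempts are logged alongside allowed ones, since an audit trail that only records successes can't show what an agent tried to do.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions; a real platform would load these from its own policy store.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "finance_agent": {"read_invoices", "draft_payment"},
    "support_agent": {"read_tickets", "reply_ticket"},
}

AUDIT_LOG: list[dict] = []


def authorize(agent: str, role: str, action: str) -> bool:
    """Check an agent's action against its role and record every attempt, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(authorize("invoice-bot", "finance_agent", "draft_payment"))  # True
print(authorize("invoice-bot", "finance_agent", "reply_ticket"))  # False
print(len(AUDIT_LOG))  # 2
```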

Vendors will promise a lot, so evaluate their claims in the context of your business. Focus not just on what the platform does, but on how well it will work in your ecosystem. Can it integrate with your current stack? Does it prevent silos or create new ones? What is the trade-off between flexibility and lock-in? Some lock-in may be acceptable for speed, but know your exit strategy.

Finally, decide who will own the platform day to day. Is it IT? Data? A dedicated AI team? Clarity of ownership matters. In many cases, you'll be best off with a managed service provider that takes platform management off your team's plate so they can focus on building functionality.

And while you're thinking about this platform, keep in mind that open source should be a large part of your solution.

Stage 4: Open Source Strategy and Implementation

For most organizations, open source should be your foundation. It gives you control, transparency, and freedom from lock-in, but as the saying goes, "With great power comes great responsibility."

The Open Source Advantage

Open source gives you transparency, flexibility, and control in a way that commercial “black box” offerings rarely do. Tools like LangBuilder and LangChain let you build and extend solutions quickly without waiting on vendor roadmaps. They also lower your exposure to lock-in, which is a real risk when commercial providers change pricing or API terms.

Implementation Essentials

To succeed, you need people comfortable working with open source (developers who can extend frameworks and DevOps staff who can operationalize them), plus governance processes to manage updates. Plan integration with your current systems from the start, along with a realistic timeline. Open source is powerful, but it takes discipline to get from experiments to production. In some cases this means hiring or upskilling staff, but it's often more effective to work with an implementation partner (such as CloudGeometry).

Compliance and Trust Considerations

This is where open source adoption can fail if you are not careful. Treat compliance and trust as first-class requirements. Bake in explainability, bias detection, audit trails, and human oversight from the beginning. Align projects with your enterprise risk policies so you don't have to retrofit controls later. Open source doesn't mean unmanaged or unsafe; in many cases, open source software is better than its commercial counterparts. It does mean that you and your implementation partners own the job of building it responsibly.

Stage 5: Quality, Monitoring, Continuous Improvement, and Scaling

Deployment may feel like the finish line, but getting a system into production is only the start. Maintaining and improving your gains means monitoring systems and making improvements where necessary.

Ongoing Monitoring

AI systems don't stay static. A model that performs well in the lab can behave very differently once it's exposed to real-world inputs, and you'll have to find and correct the problem. Even if the model works perfectly at launch, data changes, user behavior shifts, and models degrade over time. That means monitoring is not optional. You need to continuously check for accuracy, bias, explainability, and reliability. Treat QA for AI as an ongoing discipline, not a one-time sign-off.

Define key performance indicators before deployment and track them from day one. These should include technical measures such as latency, error rates, and drift, but also business outcomes such as process efficiency, cost savings, or customer satisfaction. Automate monitoring wherever possible so that issues get flagged early and teams can respond quickly. If KPIs show that performance is slipping, be prepared to retrain or fine-tune models.
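Automated KPI checks can start small. As a sketch under stated assumptions, the code below compares reported KPIs with limits fixed before deployment and uses a deliberately simple drift signal: the relative shift in one feature's mean. The KPI names, the limits, and `mean_shift` are placeholders. Production monitoring would typically use richer drift statistics and would also track business KPIs, some of which (like satisfaction) are "higher is better" and need the opposite comparison.

```python
from statistics import mean

# KPI limits agreed before deployment. The numbers are placeholders, not recommendations,
# and every KPI here is "lower is better".
THRESHOLDS = {
    "p95_latency_ms": 800.0,
    "error_rate": 0.02,
    "drift": 0.10,
}


def mean_shift(baseline: list[float], live: list[float]) -> float:
    """A deliberately simple drift signal: relative change in one feature's mean."""
    base = mean(baseline)
    scale = abs(base) if base != 0 else 1.0  # avoid dividing by zero
    return abs(mean(live) - base) / scale


def breaches(kpis: dict[str, float]) -> list[str]:
    """Return KPIs that exceed their limit; a KPI that was not reported is flagged too."""
    return [
        name
        for name, limit in THRESHOLDS.items()
        if name not in kpis or kpis[name] > limit
    ]


kpis = {
    "p95_latency_ms": 640.0,
    "error_rate": 0.031,
    "drift": mean_shift([100.0, 110.0, 90.0], [120.0, 125.0, 115.0]),  # 0.2
}
print(breaches(kpis))  # ['error_rate', 'drift']
```

A non-empty result is the trigger for a human review and, if warranted, retraining or fine-tuning.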

This cycle of monitor, evaluate, and adjust is what separates sustainable AI adoption from one-off experiments. Without it, you risk silent failures that erode trust and value. With it, you create a system that improves over time and continues to justify its place in your operations.

Ongoing Operations

Many companies underestimate the operational burden that comes with being an AI-first organization. For some organizations, the most practical approach is to outsource part or all of this burden. Managed services providers can handle tasks like monitoring, retraining, optimization, and even compliance reporting. This can often be cheaper than hiring or upskilling in-house talent, especially in a tight labor market.

The right balance depends on your budget, your ability to recruit and retain technical talent, and how much risk you want to keep in-house. Some companies keep strategic oversight and governance internally but rely on specialists for day-to-day operations. Others prefer to build the entire capability themselves.

What matters most is making this decision intentionally from the beginning, rather than waiting until problems pile up.

Scaling

Scaling AI beyond a handful of pilots is where many companies stumble. Pilots are often built in isolation, led by motivated teams using whatever tools they can get working quickly. That's fine for proving a concept, but when you try to expand across departments, those differences in approach become barriers. Without governance, every group risks reinventing the wheel, choosing incompatible platforms, or duplicating work. This slows progress, drives up costs, and creates a patchwork of systems that are hard to manage or secure.

To avoid this problem, you need coordination at the enterprise level. Establish shared infrastructure, common data practices, and cross-department standards for how AI systems are developed, deployed, and monitored. Governance should not be heavy-handed, but it should provide enough structure to prevent fragmentation. Think of it as creating a backbone that teams can plug into while still having room to innovate locally. Done well, this makes scaling smoother, faster, and far less expensive, because each new initiative builds on what is already in place instead of starting from scratch.

Measuring Success

Success in implementing AI isn't just about whether the model runs correctly. Technical metrics such as accuracy, latency, and uptime are important, but they only tell part of the story. A model can be technically sound, but if employees don't use it, customers ignore it, or the outcomes don't align with business goals, it's not providing value. That's why adoption rates, user satisfaction, and alignment with business KPIs should be part of every measurement plan. Tracking these softer metrics highlights whether the technology is actually being embraced and making a difference.

Remember that a balanced scorecard approach is useful here. Combine technical measures with business and user-focused indicators so executives see the full picture. For example, alongside error rates and system uptime, include metrics like time saved per transaction, reduction in manual work, revenue uplift, or customer satisfaction scores.

Present these metrics together in a consistent format so leadership can easily connect the dots between system performance and business impact. This builds trust in the program and makes it easier to secure continued investment and support.
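A consistent format is easy to enforce in code. This minimal sketch assumes three scorecard categories (technical, business, and user) and renders them in a fixed order, noting any category with nothing reported so that gaps are visible rather than silently omitted. The function name, category names, and sample values are illustrative only.

```python
CATEGORIES = ("Technical", "Business", "User")


def render_scorecard(metrics: dict[str, dict[str, str]]) -> str:
    """Render metrics in a fixed category order so every report has the same shape."""
    lines = []
    for category in CATEGORIES:
        lines.append(f"{category}:")
        entries = metrics.get(category, {})
        if not entries:
            lines.append("  (no metrics reported)")
        for name, value in entries.items():
            lines.append(f"  {name}: {value}")
    return "\n".join(lines)


# Sample values are made up; note the User category is deliberately missing.
report = render_scorecard({
    "Business": {"time saved per transaction": "4 min"},
    "Technical": {"error rate": "1.8%", "uptime": "99.9%"},
})
print(report)
```

Whatever order the metrics arrive in, the output always reads Technical, Business, User, so leadership sees the same structure in every review.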

Culture, Change, and Resistance

Culture is often the deciding factor in whether AI transformation succeeds or stalls. If employees believe AI is simply a cost-cutting tool that will eliminate their jobs, resistance will grow quietly but powerfully. Rumors spread, adoption slows, and even well-designed systems may go unused. Prevent this problem from the beginning with clear communication and training. Remember that you're dealing with people: they need to understand how AI fits into their roles, what tasks it will change, and how it can make their work easier rather than replacing them outright. Training should be practical, giving employees hands-on experience so they gain confidence and see how AI can make their lives better.

You have to treat change management as a core component of the program, not a side activity. Define clear roles and responsibilities that show how employees continue to add value alongside AI systems. Share success stories where teams have used AI to achieve better outcomes, and involve staff in pilot projects so they feel ownership instead of being passive recipients wondering what will happen when AI finally reaches them.

By making employees partners in the process, you not only reduce resistance but also surface valuable insights from the people closest to the work. That collaboration is what turns AI from a technology initiative into a business-wide transformation.

Conclusion: Your Next Steps

AI transformation is not a side project. It touches every part of your business. But you don't need to do everything at once. Start with value anchoring and data readiness. Build executive fluency. Run a process audit and test with pilots. Invest in a platform that can scale. Use open source wisely. Put monitoring and continuous improvement at the center.

Expect the process to take time, but also expect results if you approach it systematically. The companies that move now, with a clear plan, will be the ones ahead in three years. The ones that wait will be scrambling to catch up.

The next step is straightforward: think about the processes that can benefit from AI and what needs to happen to get there. Choose a subset to get started. That will give you a direction in which to start moving. Once you have momentum, you will find that the transformation builds on itself.